Modern computing relies heavily on graphics hardware. It renders high-quality 3D graphics and video for games and design programs, and it also runs AI applications and cryptocurrency mining. At the center of this shift are graphics processing units, or GPUs: specialized electronic circuits designed to rapidly manipulate and alter memory to accelerate the creation of images. In this in-depth guide, we will explore the history of GPU development, how these processors work, their key internal components, performance metrics, and the continued innovation propelling graphics technology into the future.

Early Graphics Hardware

The earliest programmable computers of the 1940s and 1950s lacked dedicated graphics capabilities. Mainframe systems from IBM and UNIVAC were largely limited to text output, while some 1960s systems offered limited vector graphics displays. Dedicated graphics chips began emerging in the late 1970s. One example is the Texas Instruments TMS9918 video display controller (1979), which generated tile-based backgrounds and 2D sprites. These early chips had fixed-function architectures. Later systems such as the Commodore Amiga (1985) added “blitter” hardware that moved blocks of memory for basic drawing.

Birth of the GPU

In the mid-1990s, the first consumer 3D accelerators appeared as add-in boards aimed at the emerging PC gaming market. The 3dfx Voodoo Graphics, released in 1996, was a hit. It was a 3D-only card that worked alongside a separate 2D card. One chip handled pixel and frame-buffer processing and another handled texture mapping, each with its own memory, while the CPU still did the geometry work. Nvidia’s Riva 128 (1997) had already made the company a serious competitor. It followed in 1998 with the Riva TNT, a combined 2D/3D chip with Direct3D and OpenGL support. ATI, a graphics vendor since the 1980s, launched its Radeon line in 2000. These discrete cards offloaded rendering work from the CPU and dramatically improved 3D performance.

Integration onto Motherboards

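As 3D gaming took off, graphics also moved onto motherboards. Intel’s i810 chipset of 1999 built a graphics core into the motherboard chipset, bringing basic 3D to mainstream PCs and laptops without an add-in card. The same year, Nvidia released the GeForce 256 as a standalone chip. It was marketed as the first “GPU” because it moved transform and lighting calculations from the CPU onto the graphics processor. Graphics on the same die as the CPU arrived later, with Intel’s Sandy Bridge and AMD’s Llano processors in 2011. Meanwhile, discrete GPUs kept advancing high-end gaming. Widespread adoption of Microsoft’s DirectX (Direct3D) API further cemented GPUs’ role in 3D acceleration on Windows.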

Programmable Shaders

A major breakthrough came in the early 2000s as GPUs added programmable shading through vertex and pixel shaders. These small programs run once per vertex or once per pixel during rendering to produce advanced effects. They were first written in assembly-like shader code and later in higher-level languages such as Cg, HLSL and GLSL. Nvidia’s GeForce 3 in 2001 introduced programmable vertex shaders and limited pixel shaders under DirectX 8. ATI’s Radeon 9700 followed in 2002 as the first DirectX 9 GPU, with far more flexible floating-point pixel shaders. Shaders unlocked far more complex and dynamic lighting, texturing and transformations.
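
The following is a minimal sketch of the kind of work a pixel shader performs. It is written as ordinary Python rather than in a real shading language. It assumes simple diffuse (Lambertian) lighting, where a pixel’s color is its albedo scaled by max(0, N·L). The function names are hypothetical. The explicit loop stands in for the GPU, which would run one shader invocation per pixel in parallel.

```python
import math

Vec3 = tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def lambert_pixel_shader(normal: Vec3, light_dir: Vec3, albedo: Vec3) -> Vec3:
    """Hypothetical per-pixel shader: diffuse color = albedo * max(0, N.L)."""
    intensity = max(0.0, dot(normalize(normal), normalize(light_dir)))
    return (albedo[0] * intensity, albedo[1] * intensity, albedo[2] * intensity)


def shade_framebuffer(normals, light_dir: Vec3, albedo: Vec3):
    # A GPU runs these per-pixel invocations in parallel; this loop is sequential.
    return [[lambert_pixel_shader(n, light_dir, albedo) for n in row] for row in normals]


# A 2x2 "framebuffer" of surface normals, lit from straight ahead.
normals = [[(0.0, 0.0, 1.0), (0.0, 0.7, 0.7)],
           [(0.0, 0.0, -1.0), (1.0, 0.0, 0.0)]]
image = shade_framebuffer(normals, light_dir=(0.0, 0.0, 1.0), albedo=(1.0, 0.5, 0.25))
```

Each pixel’s result depends only on that pixel’s inputs. That independence lets GPUs run shaders across thousands of pixels at once.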

Unified Shader Architecture

As shader programs grew more complex, GPUs transitioned to a unified shader model where the same multiprocessors handle all shader stages. Nvidia’s GeForce 8800 GTX, released in 2006, was a milestone, using a unified shader pipeline across 128 stream processors. This “many little cores” approach paved the way for today’s massively parallel GPU designs. Standardized APIs like DirectX 10 and OpenGL 3 accelerated adoption of unified shaders across the industry.

Explosion of Parallelism

GPUs have continued expanding their parallelism through ever higher core and thread counts. The GeForce GTX 480, released in 2010, contained 480 CUDA cores and could keep tens of thousands of threads in flight. Modern high-end GPUs have more than 10,000 cores and can keep well over 100,000 threads resident at once. APIs such as CUDA, OpenCL and DirectCompute harness this massive parallelism to accelerate general-purpose compute tasks beyond graphics.
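
The sketch below shows, under simplifying assumptions, how a CUDA- or OpenCL-style compute kernel spreads work across threads. The example is SAXPY, which computes y = a·x + y. Each thread derives a global index from its block and thread IDs, mirroring CUDA’s `blockIdx.x * blockDim.x + threadIdx.x`, and then handles one array element. The `saxpy_kernel` and `launch` helpers are hypothetical Python stand-ins. A real GPU would run the threads concurrently rather than in nested loops.

```python
def saxpy_kernel(block_idx: int, block_dim: int, thread_idx: int,
                 a: float, x: list[float], y: list[float]) -> None:
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(x):  # the last block may contain spare threads with no element
        y[i] = a * x[i] + y[i]


def launch(kernel, n: int, block_dim: int, *args) -> int:
    """Hypothetical launcher: runs every (block, thread) pair sequentially."""
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)
    return grid_dim


x = [1.0] * 1000
y = [2.0] * 1000
blocks = launch(saxpy_kernel, len(x), 256, 3.0, x, y)  # 4 blocks of 256 threads
```

Here 1,000 elements need four blocks of 256 threads. The 24 surplus threads in the last block fail the bounds check and do nothing.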

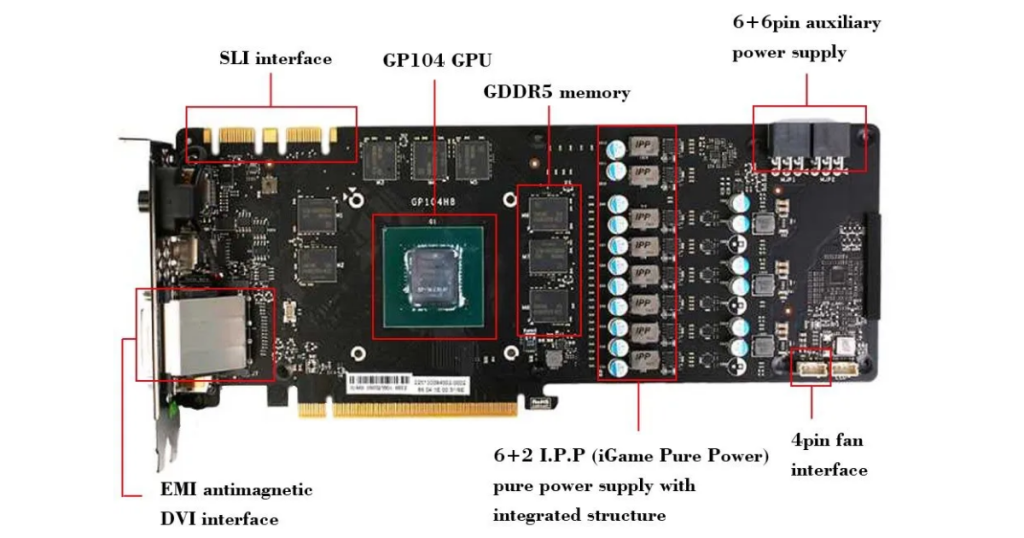

Key Internal Components

While GPU designs vary by manufacturer, they share common internal components optimized for parallel workloads:

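– Multiprocessors: Nvidia calls these streaming multiprocessors (SMs) and AMD calls them compute units. Each contains dozens to over a hundred simple cores that run in SIMT (Single-Instruction, Multiple-Thread) fashion.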

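– Cores: Simple arithmetic lanes rather than independent CPUs. The multiprocessor’s warp (Nvidia) or wavefront (AMD) scheduler issues one instruction at a time to a group of lanes, and each lane processes one thread’s data.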

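– Threads and Warps: Threads execute in groups called warps (32 threads on Nvidia GPUs) or wavefronts (32 or 64 on AMD GPUs). A multiprocessor switches among many resident warps, keeping its lanes busy while other warps wait on memory and thereby hiding latency.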

– Shared Memory: High-bandwidth, low-latency memory shared within a multiprocessor for thread communication.

– Register File: A large on-chip register file in each multiprocessor, partitioned among resident threads. It is the fastest storage available to a thread.

– Local/Global Memory: Larger but higher-latency memory in the card’s VRAM. Global memory holds textures, images and buffers, while local memory provides per-thread spill space.

– Cache Hierarchy: Per-multiprocessor L1/texture caches and a shared L2 cache reduce trips to VRAM and save memory bandwidth.

– ROPs: Raster operation units finalize pixel color/depth writes to framebuffer.

– Async Engines: Copy (DMA) engines and asynchronous compute queues that let memory transfers and independent workloads overlap with computation.

– Floating-Point Units: Dedicated FP32 and sometimes FP64 arithmetic logic.

– Ray/Tensor Cores: Specialized units in recent GPUs. Ray tracing cores accelerate intersection tests, and tensor cores accelerate the matrix math behind AI workloads.
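
A simplified model of warp execution helps explain why branching matters on GPUs. This hypothetical Python sketch groups a block’s thread IDs into 32-wide warps, using Nvidia’s warp size as an assumption. It then counts how many serialized passes a warp needs for an if/else. If every thread takes the same branch, one pass suffices. If threads disagree, SIMT hardware runs both paths, masking off the inactive lanes each time. Real schedulers are more elaborate, so treat this as an illustration rather than a performance model.

```python
WARP_SIZE = 32  # assumed Nvidia warp width; AMD wavefronts are 32 or 64 lanes


def split_into_warps(num_threads: int, warp_size: int = WARP_SIZE) -> list[range]:
    """Group consecutive thread IDs of a block into warps."""
    return [range(start, min(start + warp_size, num_threads))
            for start in range(0, num_threads, warp_size)]


def passes_for_branch(warp: range, condition) -> int:
    """Simplified count of serialized passes a warp needs for an if/else.

    One instruction stream is issued per warp, so if lanes disagree on the
    condition, the warp runs the 'if' path with some lanes masked off and
    then the 'else' path with the others masked off.
    """
    outcomes = {bool(condition(tid)) for tid in warp}
    return len(outcomes)  # 1 = uniform branch, 2 = divergent branch


warps = split_into_warps(100)
print(len(warps), list(warps[-1]))                            # 4 [96, 97, 98, 99]
print(passes_for_branch(warps[0], lambda tid: tid < 64))      # 1
print(passes_for_branch(warps[0], lambda tid: tid % 2 == 0))  # 2
```

A 100-thread block fills three warps completely and leaves a partly empty fourth. A condition that splits lanes within a warp doubles the passes, which is why GPU code tries to keep branches uniform across a warp.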

Performance Metrics

When comparing GPU performance, key metrics include:

– Compute Units: Number of multiprocessors (Nvidia SMs, AMD compute units, Intel Xe-cores).

– CUDA/Shader Cores: Number of individual cores within compute units.

– Memory Interface/Bus Width: Width of the memory bus in bits. Together with the memory data rate, it determines memory bandwidth in GB/s.

– VRAM: Total video memory in GB using GDDR or HBM technologies.

– TFLOPS: Trillions of floating-point operations per second, a measure of peak raw compute that is usually quoted for FP32.

– Benchmark Scores: Standard tests like 3DMark measure graphics/gaming prowess.

– TDP: Thermal design power, the heat the cooling system must be able to dissipate. It is roughly the card’s sustained power draw under load.

– Manufacturing Process: Smaller process nodes fit more transistors into the same area and generally improve power efficiency.
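
Two of these metrics follow from simple arithmetic. Peak FP32 throughput is commonly estimated as cores × clock × 2, because a fused multiply-add counts as two floating-point operations. Memory bandwidth is the bus width in bytes × the per-pin data rate. The sketch below applies both formulas to a hypothetical card with 10,240 cores at 2.0 GHz and a 256-bit bus at 20 Gbps. These numbers are illustrative assumptions, not the specification of any real product.

```python
def peak_fp32_tflops(cores: int, boost_clock_ghz: float, ops_per_cycle: int = 2) -> float:
    """Peak FP32 TFLOPS; ops_per_cycle=2 assumes one fused multiply-add per core per cycle."""
    gflops = cores * boost_clock_ghz * ops_per_cycle
    return gflops / 1000.0


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Bandwidth in GB/s: bus width in bytes times per-pin data rate in Gbit/s."""
    return bus_width_bits / 8 * data_rate_gbps


# Hypothetical card, used only for illustration.
print(f"{peak_fp32_tflops(10_240, 2.0):.2f} TFLOPS")  # 40.96 TFLOPS
print(f"{memory_bandwidth_gbs(256, 20.0):.0f} GB/s")  # 640 GB/s
```

These are theoretical peaks. Real workloads rarely sustain them, which is one reason benchmark scores remain a useful complement to spec-sheet numbers.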

Major GPU Families

The top GPU manufacturers each offer several product lines targeting different market segments:

– Nvidia GeForce: Gaming-focused consumer cards, sold as RTX and GTX models.

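– Nvidia Quadro: Professional workstation cards with drivers certified for CAD and content-creation software. Nvidia has since moved this line to RTX professional branding (for example, the RTX A6000).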

– Nvidia Tesla: High-end data center accelerators for AI and HPC. The Tesla name has since been dropped, and current parts are sold under model names such as A100 and H100.

– AMD Radeon: Consumer graphics with RX models competing with GeForce.

– AMD Radeon Pro: Workstation graphics to rival Quadro offerings.

– AMD Instinct: Data center GPUs optimized for machine learning workloads.

– Intel Arc: Intel’s discrete graphics line and the newest entrant. It launched in 2022 with laptop GPUs first and desktop cards soon after.

Specialized GPU Uses

Beyond graphics, GPUs now power diverse workloads through parallel computing APIs:

– Game Development: GPU ray tracing and physics simulation speed up lighting previews and iteration during game creation.

– Video Editing: Hardware encoding, effects, rendering boost nonlinear editing.

– Machine Learning: Deep learning frameworks leverage GPUs’ matrix math prowess.

– High-Performance Computing: Fluid dynamics, molecular modeling, astrophysics simulations.

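– Cryptocurrency Mining: GPUs compute the enormous numbers of hashes that proof-of-work mining requires. Bitcoin mining is now dominated by ASICs, however, and Ethereum’s 2022 switch to proof of stake ended GPU mining on that network.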

– Rendering: Production 3D renderers increasingly run on GPUs, often across multi-GPU render farms.

– Virtual/Augmented Reality: Massive pixel fill rates and low latency enable immersive VR/AR.
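
The machine learning item above rests on a simple property of matrix multiplication. Every element of C = A × B is an independent dot product of one row of A and one column of B. The hypothetical Python sketch below makes that explicit. `output_element` is the work a single GPU thread, or a tile of threads, might be assigned, and the nested loop stands in for launching one thread per output element. Real frameworks call heavily optimized libraries and tensor cores rather than code like this.

```python
Matrix = list[list[float]]


def output_element(a: Matrix, b: Matrix, row: int, col: int) -> float:
    # One dot product; it does not depend on any other (row, col) pair,
    # so all output elements could be computed in parallel.
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a: Matrix, b: Matrix) -> Matrix:
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    # On a GPU, each (row, col) pair would map to its own thread.
    return [[output_element(a, b, r, c) for c in range(len(b[0]))]
            for r in range(len(a))]


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```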

Advancing GPU Technology

Continued innovation will further GPU capabilities in key areas:

– Ray Tracing: Hardware ray tracing allows photorealistic rendering for games, design, VR/AR.

– Tensor Cores: Dedicated matrix-math units that accelerate neural network training and inference.

– Mesh Shaders: Advanced geometry processing enables complex virtual worlds.

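– Variable Rate Shading: Lets developers shade less important regions, such as the periphery or fast-moving areas, at a coarser rate (for example, one shader invocation per 2×2 pixel block). This saves work where the eye is least likely to notice.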

– Memory Advances: HBM and GDDR6X raise bandwidth. HBM3 and HBM3E already ship in data center GPUs, and further generations are on the way.

– 3D Packaging: Chiplet designs and multi-chip modules place or stack logic and memory dies close together for density and efficiency gains.

– Optical Interconnects: Optical links are being explored as faster, lower-power replacements for electrical wiring between chips.

– Neuromorphic Computing: Spiking neural networks modeled after the human brain.

– Quantum Computing: GPUs already simulate quantum circuits, and they may work alongside quantum processors as that technology matures.
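
The saving from variable rate shading can be estimated with basic arithmetic. Shading at a coarse rate of rx × ry pixels per invocation cuts pixel-shader invocations by roughly that factor. The sketch below assumes a hypothetical 1920×1080 frame whose central 960×540 region stays at full rate while the rest is shaded at 2×2. The region split and helper names are illustrative assumptions, not how any particular graphics API expresses shading rates.

```python
import math


def invocations(width: int, height: int, rate_x: int = 1, rate_y: int = 1) -> int:
    """Pixel-shader invocations for a width x height region shaded at rate_x x rate_y."""
    return math.ceil(width / rate_x) * math.ceil(height / rate_y)


def foveated_invocations(width: int, height: int, center_w: int, center_h: int,
                         coarse: tuple[int, int] = (2, 2)) -> int:
    """Hypothetical split: a full-rate center rectangle and a coarse-rate periphery.

    Assumes the periphery divides evenly into coarse blocks, so it is
    estimated from its pixel count.
    """
    center = invocations(center_w, center_h)
    periphery_pixels = width * height - center_w * center_h
    return center + periphery_pixels // (coarse[0] * coarse[1])


full = invocations(1920, 1080)                      # every pixel shaded once
coarse = invocations(1920, 1080, 2, 2)              # whole frame at 2x2
mixed = foveated_invocations(1920, 1080, 960, 540)  # full-rate center only
print(full, coarse, mixed)                          # 2073600 518400 907200
```

In this example, keeping the center at full rate still cuts total shading work to under half of the full-rate frame.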


Conclusion

From simple 2D accelerators to today’s massively parallel multi-teraflop behemoths, GPUs have revolutionized visual computing and transformed countless industries. Their continued advancement driven by gaming, AI and other demanding workloads ensures graphics processors will remain at the cutting edge of semiconductor innovation for the foreseeable future. With specialized architectures perfectly suited to parallel tasks, GPUs show no signs of slowing down their remarkable rise.